A mixed approach to using Azure Functions

Web applications often consist not only of human-triggered services, such as HTML pages, but also of automatically triggered services, such as scheduled maintenance processes, nightly reports, and status emails. The latter need a scheduler, which often forces us to host these services inside a Windows service or a Linux daemon, making deployment more problematic and hosting more complex. Azure Functions can help.

Many examples highlight a common pattern for Azure Functions: placing and running the application logic in the function itself. There is nothing wrong with this, and it is the best approach in many cases, but sometimes we want to keep the application logic in a traditional environment, such as a web application hosted on-premises or in an Azure App Service. In those cases, operations are usually triggered by HTTP calls: SOAP, REST, plain HTTP GET, etc. A web application on its own, however, lacks important features such as timer triggers (a scheduler) and queue services for processing items asynchronously. Azure Functions can play the role of an external scheduler and of a processor of “commands” stored on queues.

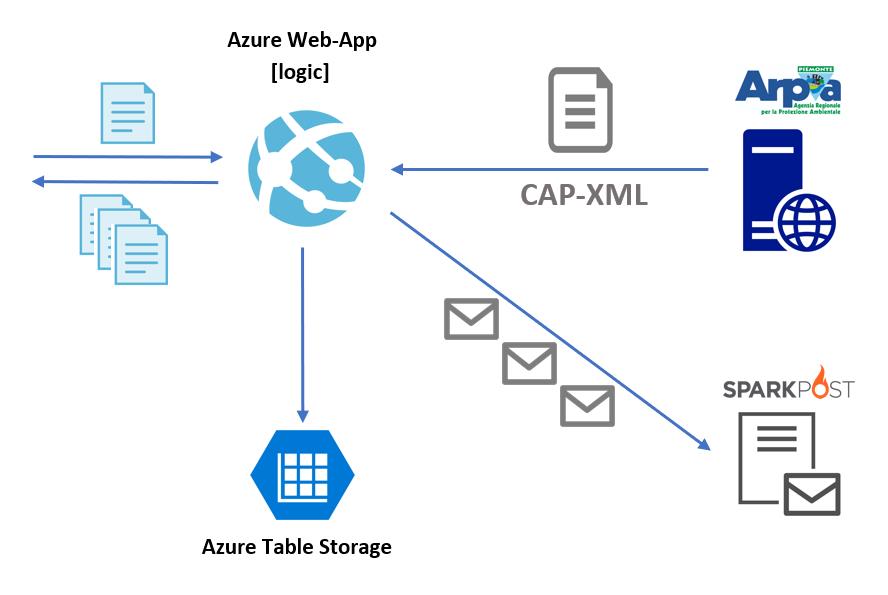

I tried this mixed approach on a simple use case that I had already faced in the past with different solutions and platforms. ARPA Piemonte, the regional environmental protection agency for Piedmont in Italy, publishes a daily bulletin about hydro-meteorological risks for its area of competence. The region is further divided into 11 sub-zones to give more detailed and relevant information. The bulletin is released as a PDF file (for human readers) and as an XML data file (for system integration) following the CAP-XML standard.

The idea is to check regularly for a new bulletin, fetch it, and deliver it by email to subscribers. Each subscriber receives the bulletin for the zone they subscribed to. Optionally, a subscriber can choose to receive the bulletin every day or only in case of a real alert (“yellow” alert level or higher).
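The delivery rule can be sketched in a few lines of Python. This is only an illustration, not the code of the actual service: the `Subscriber` type, the `alerts_only` flag, and the `should_receive` function are hypothetical names, and the ordering of levels above “yellow” (orange, then red) is an assumption.

```python
from dataclasses import dataclass
from typing import Dict

# Assumption: alert levels ordered from lowest to highest severity.
# Only "yellow" is named in the text; the others are illustrative.
ALERT_LEVELS = ["green", "yellow", "orange", "red"]


@dataclass
class Subscriber:
    email: str
    zone: str                  # sub-zone code, e.g. "Piem-L"
    alerts_only: bool = False  # True: deliver only on yellow or higher


def should_receive(subscriber: Subscriber, zone_levels: Dict[str, str]) -> bool:
    """Decide whether today's bulletin goes to this subscriber.

    zone_levels maps each sub-zone code to its alert level for the day.
    """
    level = zone_levels.get(subscriber.zone)
    if level is None:
        # The bulletin has no data for this zone: nothing to send.
        return False
    if not subscriber.alerts_only:
        # Daily subscribers always get the bulletin for their zone.
        return True
    return ALERT_LEVELS.index(level) >= ALERT_LEVELS.index("yellow")
```

A daily subscriber for zone "Piem-L" always gets the bulletin, while an alerts-only subscriber gets it only when that zone is at yellow or higher.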

The whole activity is split into simpler, atomic activities, the “commands”, such as getting the data from ARPA, processing the XML data, preparing the email content, and delivering emails to subscribers based on their area of interest. Every command is a small piece of JSON like:

{"Name":"CMD_SEND_EMAIL","Parameters":["13_2019","Piem-L","user@domain"]}
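As a minimal sketch, such a command could be modeled in Python as follows. The `Command` class and its method names are hypothetical; only the `Name` and `Parameters` fields come from the JSON above, and treating `Parameters` as optional is an assumption.

```python
import json
from dataclasses import dataclass, field
from typing import List


@dataclass
class Command:
    name: str
    parameters: List[str] = field(default_factory=list)

    def to_json(self) -> str:
        # Compact separators reproduce the wire format shown above.
        payload = {"Name": self.name, "Parameters": self.parameters}
        return json.dumps(payload, separators=(",", ":"))

    @classmethod
    def from_json(cls, raw: str) -> "Command":
        data = json.loads(raw)
        # Assumption: "Parameters" may be omitted for commands without arguments.
        return cls(name=data["Name"], parameters=list(data.get("Parameters", [])))
```

Keeping commands this small means each one fits comfortably in a single queue message and can be processed independently of the others.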

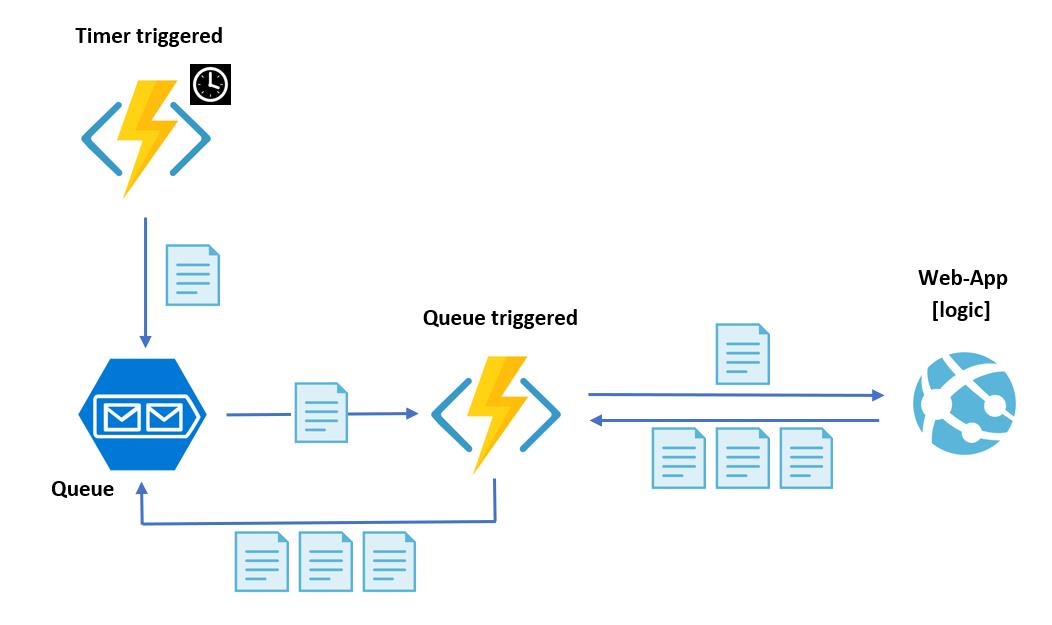

Every command is stored on an Azure Queue as a message. When a message is available, it triggers an Azure Function that receives the command as input, calls the web application with the JSON, and receives a list of JSON commands in response. Every received command is then enqueued on the same queue.
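The loop described above can be simulated locally without any Azure dependency. In this sketch a `collections.deque` stands in for the Azure Queue and a plain function stands in for the HTTP call to the web application; `process_message`, `drain`, and `fake_web_app` are hypothetical names, and the replies of the fake application are made up for the demonstration.

```python
import json
from collections import deque
from typing import Callable, Deque, List


def process_message(message: str,
                    call_web_app: Callable[[str], List[str]],
                    queue: Deque[str]) -> None:
    """What the queue-triggered function does for a single message."""
    # Forward the command to the web application (an HTTP call in reality).
    follow_ups = call_web_app(message)
    # Enqueue every returned command on the same queue.
    for command_json in follow_ups:
        queue.append(command_json)


def drain(queue: Deque[str],
          call_web_app: Callable[[str], List[str]],
          max_messages: int = 1000) -> int:
    """Process messages until the queue is empty; returns how many ran."""
    processed = 0
    while queue and processed < max_messages:
        process_message(queue.popleft(), call_web_app, queue)
        processed += 1
    return processed


def fake_web_app(command_json: str) -> List[str]:
    """Stand-in for the web application, with made-up replies."""
    name = json.loads(command_json)["Name"]
    if name == "GET_BOLL":
        return ['{"Name":"START_DELIVERY"}']
    if name == "START_DELIVERY":
        return ['{"Name":"CMD_SEND_EMAIL","Parameters":["13_2019","Piem-L","user@domain"]}',
                '{"Name":"CMD_SEND_EMAIL","Parameters":["13_2019","Piem-L","other@domain"]}']
    return []  # leaf commands produce no follow-ups
```

Seeding the queue with `{"Name":"GET_BOLL"}` and calling `drain(queue, fake_web_app)` processes the whole chain: one command fans out into the others until no follow-ups remain. The `max_messages` cap is only a safety net for the simulation, in case a handler keeps re-enqueuing commands.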

The timer-triggered function, which runs every 15 minutes, is responsible for creating the first command, the one that checks for a new bulletin: {"Name":"GET_BOLL"}

The web application logic, exposed as a plain HTTP endpoint, runs only when called by the Azure Function and receives a command as a JSON string. It executes the command and, if required, returns further related commands. For example, if the execution of “GET_BOLL” finds a new bulletin, it returns a “START_DELIVERY” command. Every execution of a command can return an array of commands, for example a list of commands, one for each subscriber, like: {"Name":"CMD_SEND_EMAIL","Parameters":["13_2019","Piem-L","user@domain"]}
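On the web application side, the command handling could look roughly like the following sketch. It is not the actual implementation: the handler registry, the `execute` function, the in-memory subscriber list, and the idea that “START_DELIVERY” carries the bulletin id as a parameter are all assumptions made for illustration.

```python
import json
from typing import Callable, Dict, List

Handler = Callable[[List[str]], List[dict]]
HANDLERS: Dict[str, Handler] = {}


def handles(name: str) -> Callable[[Handler], Handler]:
    """Register a function as the handler for one command name."""
    def register(func: Handler) -> Handler:
        HANDLERS[name] = func
        return func
    return register


# Hypothetical data; the real service reads subscribers from storage.
SUBSCRIBERS = [("Piem-L", "user@domain"), ("Piem-L", "other@domain")]


@handles("GET_BOLL")
def get_boll(parameters: List[str]) -> List[dict]:
    new_bulletin_id = "13_2019"  # assumption: pretend a new bulletin was found
    return [{"Name": "START_DELIVERY", "Parameters": [new_bulletin_id]}]


@handles("START_DELIVERY")
def start_delivery(parameters: List[str]) -> List[dict]:
    bulletin_id = parameters[0]
    # Fan out: one email command per subscriber.
    return [{"Name": "CMD_SEND_EMAIL", "Parameters": [bulletin_id, zone, email]}
            for zone, email in SUBSCRIBERS]


@handles("CMD_SEND_EMAIL")
def send_email(parameters: List[str]) -> List[dict]:
    # The real handler would deliver the email; a leaf command returns nothing.
    return []


def execute(raw: str) -> str:
    """Body of the HTTP endpoint: JSON command in, JSON array of commands out."""
    command = json.loads(raw)
    handler = HANDLERS.get(command["Name"])
    if handler is None:
        raise ValueError("Unknown command: " + command["Name"])
    return json.dumps(handler(command.get("Parameters", [])))
```

With a registry like this, adding a new step to the process means adding one handler; the endpoint and the Azure Functions stay unchanged.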

Processed bulletin data, subscriber information, logs, etc. are stored in Azure Tables. The emails are delivered through the SparkPost service.

Using Azure Functions and a queue-based approach allows the service to scale as the number of subscribers grows. Resources are used only when really needed, i.e. when a new bulletin is available, normally around 13:00. For the rest of the day, only a cheap and fast check runs every 15 minutes.

The mixed approach lets you keep the logic inside the web application and delegate to Azure Functions only the triggering of the whole process.