Some scalability tests on Azure Functions

Functions is the serverless compute service of Microsoft Azure. Horizontal scalability is one of its key features, and I want to understand how it behaves on the Consumption Plan, the best choice for letting Azure Functions scale out as needed.

Not every kind of workload is suitable for Functions. To achieve high scalability, the workload must not be a single long-running job: long-running jobs are subject to execution time limits and can hit unexpected timeouts. Short jobs, on the other hand, can run in parallel, on the same host or on different hosts that the Functions runtime creates and assigns dynamically.

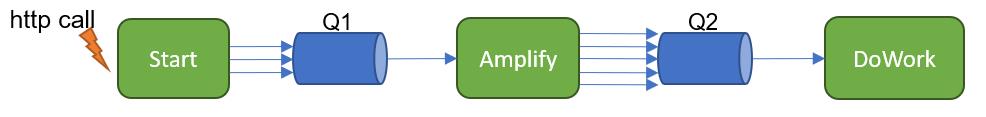

When the workload is made up of many small, short-running jobs, a very helpful approach is to use a queue system such as Azure Queue or Service Bus Queue. These services can be configured as the trigger that executes the function. Elements in the queue are automatically dequeued in parallel and delivered for processing to different instances of the same function.
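To make the "dequeued in parallel" idea concrete, here is a minimal local sketch that uses only the Python standard library. It is not Azure code: the in-memory queue stands in for Azure Queue, the threads stand in for function instances, and the names `drain` and `process_in_parallel` are made up for this illustration.

```python
import queue
import threading


def drain(q, results, worker_id):
    """Pull messages until the queue is empty, recording which worker handled each."""
    while True:
        try:
            message = q.get_nowait()
        except queue.Empty:
            return
        # list.append is atomic in CPython, so no extra lock is needed here.
        results.append((worker_id, message))


def process_in_parallel(messages, worker_count):
    """Hypothetical stand-in for a queue trigger: several workers share one queue."""
    q = queue.Queue()
    for message in messages:
        q.put(message)

    results = []
    workers = [
        threading.Thread(target=drain, args=(q, results, worker_id))
        for worker_id in range(worker_count)
    ]
    for worker in workers:
        worker.start()
    for worker in workers:
        worker.join()
    return results


handled = process_in_parallel(range(1000), worker_count=8)
print(len(handled), "messages handled")
```

Every message is delivered to exactly one worker, which is the property that lets the real service spread the queue across many function instances.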

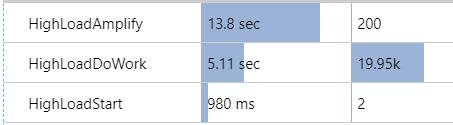

In my simulation, I created a fake micro-job that lasts 5 seconds with a CPU load of about 20%. Using a function-queue-function sequence, I processed 20,000 of these fake jobs, corresponding to a total execution time of 20,000 x 5 s = 100,000 s, or about 27.8 hours.
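The sequential baseline is simple arithmetic; the short sketch below just spells it out, assuming every job takes exactly 5 seconds and the jobs run one after another.

```python
JOB_COUNT = 20_000   # fake jobs processed in the test
JOB_SECONDS = 5      # duration of a single fake job

total_seconds = JOB_COUNT * JOB_SECONDS
total_hours = total_seconds / 3600

print(f"{total_seconds} s = {total_hours:.1f} h")
```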

The “Start” function enqueues 100 messages in Q1. For each message in Q1, “Amplify” enqueues 100 messages in Q2. “DoWork” executes the fake job once for each message in Q2. Each run of “Start” therefore results in 10,000 runs of DoWork.

In my simulation I triggered “Start” twice within a few seconds, so 20,000 jobs were eventually processed by DoWork.
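The fan-out can be checked with a small in-memory model of the Start, Amplify and DoWork chain. This is only a counting sketch, not the actual function code: the two `deque` objects stand in for Q1 and Q2, and the message names are invented for the example.

```python
from collections import deque

FAN_OUT = 100  # messages enqueued per invocation, as described above


def start(q1):
    """Model of "Start": enqueue 100 seed messages in Q1."""
    for i in range(FAN_OUT):
        q1.append(f"seed-{i}")


def amplify(message, q2):
    """Model of "Amplify": enqueue 100 job messages in Q2 for one Q1 message."""
    for i in range(FAN_OUT):
        q2.append(f"{message}/job-{i}")


def run_pipeline(start_triggers):
    q1, q2 = deque(), deque()
    for _ in range(start_triggers):
        start(q1)
    q1_total = len(q1)

    while q1:
        amplify(q1.popleft(), q2)
    q2_total = len(q2)

    do_work_runs = 0
    while q2:
        q2.popleft()       # "DoWork" would run the 5-second fake job here
        do_work_runs += 1
    return q1_total, q2_total, do_work_runs


q1_total, q2_total, runs = run_pipeline(start_triggers=2)
print(q1_total, q2_total, runs, q1_total + q2_total)
```

With two triggers of Start the model counts 200 messages in Q1 and 20,000 in Q2, so 20,200 messages overall, which is the figure that shows up in the queue statistics later in this post.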

The results are astonishing. The whole processing activity required only 7.5 minutes instead of almost 28 hours, a time reduction of about 99.5%.
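As a rough sanity check of that reduction, the sketch below compares the sequential baseline with the observed wall-clock time. The "average jobs in flight" figure is my own derived estimate, assuming each job takes exactly 5 seconds; it is not something measured in the test.

```python
sequential_seconds = 20_000 * 5   # all jobs executed one after another
observed_seconds = 7.5 * 60       # wall-clock time of the whole run

reduction = 1 - observed_seconds / sequential_seconds
average_parallelism = sequential_seconds / observed_seconds

print(f"time reduction: {reduction:.2%}")
print(f"average jobs in flight: {average_parallelism:.0f}")
```

Read this way, the run behaved as if a bit more than 200 jobs were executing at any given moment, averaged over the whole 7.5 minutes, including the initial period when few hosts were available.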

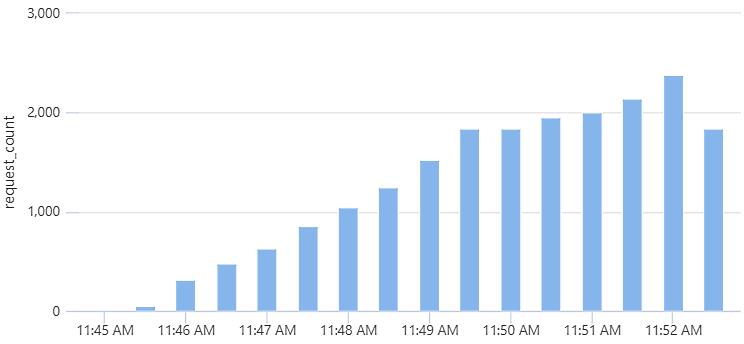

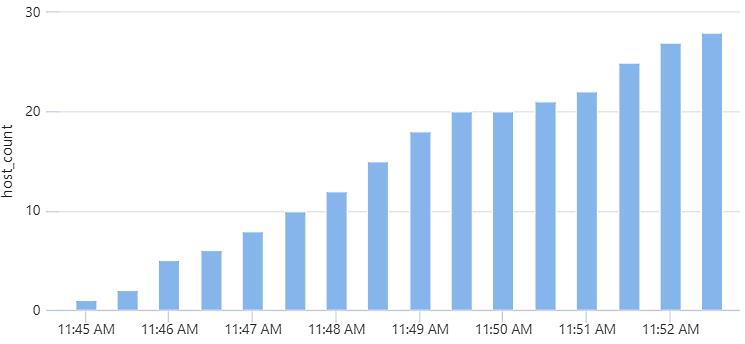

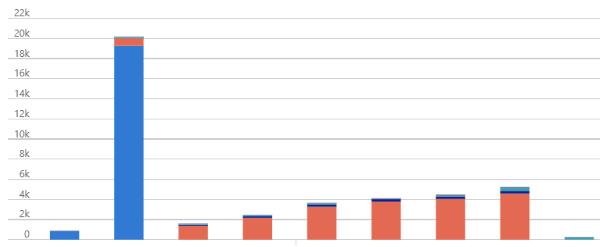

Using Application Insights data, I created a couple of charts showing the requests processed and the number of hosts involved, i.e. instances of the same function running on different machines.

Looking at the charts, we can see how the Azure Functions runtime dynamically adapts the number of instances dedicated to processing requests. The adaptive process is not immediate; it takes some time.

If I look at the Azure Storage Queue statistics, in the first phase 20,200 messages were pushed in (blue): 200 in Q1 plus 20,000 in Q2. Then the DoWork function instances consumed all the items (red).

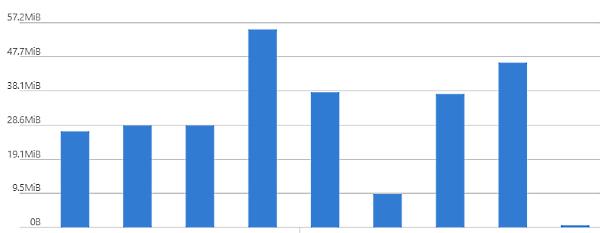

I also noticed interesting data in the Azure Storage Files statistics. Because the Functions application files (dll, json, etc.) are stored in a Files share, the egress statistics show the reads caused by new hosts being assigned to run my functions.

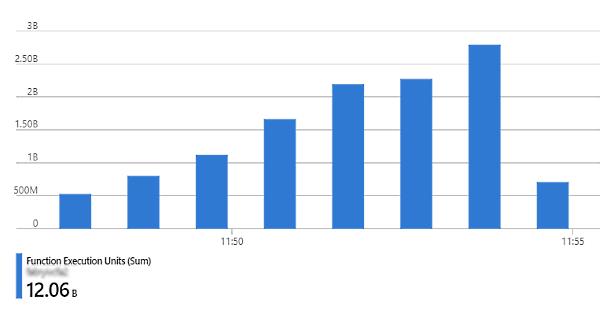

Regarding execution costs, the whole run consumed 12 billion “function execution units”, equivalent to about 12,000 GB-s, corresponding to 12,000 x 0.000014 = 0.168 €.
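The conversion behind that figure can be sketched as follows. It assumes that function execution units are expressed in MB-milliseconds, so that 1 GB-s corresponds to 1,024,000 units; treat that factor as an assumption to verify against the current Azure documentation. The price per GB-s is the one used above, and the sketch ignores any free grant or per-execution charge.

```python
MB_MS_PER_GB_S = 1024 * 1000   # assumption: execution units are MB-milliseconds
PRICE_PER_GB_S = 0.000014      # EUR per GB-s, the figure used in this post


def execution_cost(execution_units):
    """Convert execution units to GB-s and to a cost in EUR (hypothetical helper)."""
    gb_seconds = execution_units / MB_MS_PER_GB_S
    return gb_seconds, gb_seconds * PRICE_PER_GB_S


gb_seconds, cost = execution_cost(12e9)
print(f"{gb_seconds:.2f} GB-s, {cost:.3f} EUR")

# The rounder figure quoted in the text, using 12,000 GB-s directly.
rounded_cost = 12_000 * PRICE_PER_GB_S
print(f"{rounded_cost:.3f} EUR")
```

Under that assumption the exact conversion gives slightly fewer GB-s than the rounded 12,000, so the rounded estimate errs on the high side by a fraction of a cent.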

Conclusions

If you have a workload that can be split into relatively short-running sub-jobs, Azure Functions on the Consumption Plan should be considered. In such a situation it behaves better than traditional approaches like Virtual Machines or Web Applications. The strong horizontal scalability and serverless architecture allow you to process heavy workloads very fast and at a very low cost.